AWS ECS Fargate

Overview

Lacework’s workload security provides visibility into all processes and applications within an organization’s cloud environments, including workload and runtime analysis and automated anomaly and threat detection.

After you install the Lacework agent, Lacework scans hosts and streams select metadata to the Lacework data warehouse to build a baseline of normal behavior, which is updated hourly. From this, Lacework can provide detailed in-context alerts for anomalous behavior by comparing each hour to the previous one. Anomaly detection uses machine learning to determine, for example, if a machine sends data to an unknown IP, or if a user logs in from an IP that has not been seen before.

Lacework offers two methods for deployment into AWS ECS Fargate. The first method is a container image embedded-agent approach, and the second is a sidecar-based approach that utilizes a volume map. In both deployment methods, the Lacework agent runs inside the application container.

For embedded-agent deployments, we recommend a multi-stage image build, which starts the Lacework agent in the context of the container as a daemon.

For sidecar-based deployments, the Lacework agent sidecar container exports a storage volume that is mapped by the application container. By mounting the volume, the agent can run in the application container namespace.

Prerequisites

- AWS Fargate platform versions 1.3 or 1.4.

- A supported OS distribution. See the public documentation for supported distributions.

- Lacework agent version 3.2 or later.

- The ability to run the Lacework agent as root.

- Your application container must have the ca-certificates package (or an equivalent global root certificate store) in order to reach Lacework APIs. As of 2021, the root certificate used by Lacework is Go Daddy Root Certificate Authority - G2.

Access Tokens

In an environment with mixed Fargate and non-Fargate Lacework deployments, Lacework recommends using separate access tokens for each of these deployments. This can make deployments easier to manage.

note

There are multiple methods to work with access tokens for Fargate containers. This includes building the container with the access token within the image or injecting the access token, either statically or as a secret, as an environment variable in the Task Definition setup.

The recommended approach is to use AWS Secrets Manager to inject the access token as an environment variable through the Task Definition. Both of these approaches are demonstrated in the following examples, and either token management approach works across both deployment methods.

Agent Server URL

If you are a non-US user, you must configure the agent server URL. You can find the available URLs in the Agent Configuration docs.

There are two options for this:

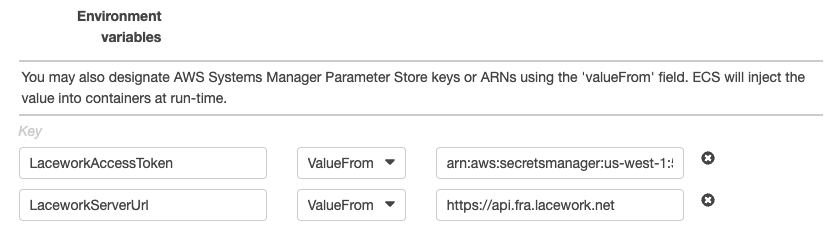

- When using the lacework-sidecar.sh script, you can use the LaceworkServerUrl environment variable in your task definition to set this value. Set it in the container definition of your main container (not the datacollector-sidecar container).

- If you manage the Lacework configuration file (/var/lib/lacework/config/config.json) yourself, you can find a configuration example in the Agent Configuration docs as well.
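As a minimal sketch of the first option, the following Python snippet adds a LaceworkServerUrl entry to the main container of a task definition held as a dictionary. The helper name set_server_url and the container name main-application are illustrative assumptions; only the LaceworkServerUrl variable name and the EU URL come from this page.

```python
def set_server_url(task_def, container_name, server_url):
    """Set LaceworkServerUrl on one container of a task definition dict (hypothetical helper)."""
    for container in task_def["containerDefinitions"]:
        if container["name"] != container_name:
            continue
        env = container.setdefault("environment", [])
        # Drop any previous value so the variable is defined exactly once.
        env[:] = [e for e in env if e["name"] != "LaceworkServerUrl"]
        env.append({"name": "LaceworkServerUrl", "value": server_url})
        return task_def
    raise ValueError(f"no container named {container_name!r}")


task_def = {
    "containerDefinitions": [
        {"name": "datacollector-sidecar"},
        {"name": "main-application", "environment": []},
    ]
}
# Only the main container is changed; the sidecar container is left untouched.
set_server_url(task_def, "main-application", "https://api.fra.lacework.net")
```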

Deployment Methods

Lacework supports two deployment methods using AWS ECS Fargate:

- Embedded-Agent Deployment.

- Sidecar-Based Deployment, using a volume map approach.

Embedded-Agent Deployment

Directly including the Lacework agent is possible in scenarios where Lacework agents can be directly embedded into the container images you build. The following steps are based on AWS ECR deployment, but you can use similar steps for other registry types.

This method consists of three high-level steps: modifying your Dockerfile to include a multi-stage build with the Lacework agent, building and pushing the new image, and deploying.

Step 1: Add the Agent to your Existing Dockerfile

This step consists of (a) adding a build stage, (b) copying the Lacework agent binary, and (c) setting up configurations.

The following is a full example of a very simple Dockerfile along with its entrypoint script. This example adds three lines and comments indicating the Lacework agent additions.

note

This example injects the access token into the image, but the access token could also be loaded as an environment variable instead. The secret can also be passed as an environment variable using AWS Secrets Manager.

# syntax=docker/dockerfile:1

# Lacework agent: adding a build stage

FROM lacework/datacollector:latest-sidecar AS agent-build-image

FROM ubuntu:latest

RUN apt-get update && apt-get install -y \
    ca-certificates \
    && rm -rf /var/lib/apt/lists/*

# Lacework agent: copying the binary

COPY --from=agent-build-image /var/lib/lacework-backup /var/lib/lacework-backup

# Lacework agent: setting up configurations

RUN --mount=type=secret,id=LW_AGENT_ACCESS_TOKEN \
    mkdir -p /var/lib/lacework/config && \
    echo '{"tokens": {"accesstoken": "'$(cat /run/secrets/LW_AGENT_ACCESS_TOKEN)'"}}' > /var/lib/lacework/config/config.json

ENTRYPOINT ["/var/lib/lacework-backup/lacework-sidecar.sh"]

CMD ["/your-app/run.sh"]

The RUN command uses BuildKit to securely pass the Lacework agent access token as LW_AGENT_ACCESS_TOKEN. This is recommended, but not a requirement.

If your tenant is deployed outside the US, specify the serverurl for your region:

- EU = https://api.fra.lacework.net

- ANZ = https://auprodn1.agent.lacework.net

# EU tenants

RUN --mount=type=secret,id=LW_AGENT_ACCESS_TOKEN \
    mkdir -p /var/lib/lacework/config && \
    echo '{"tokens": {"accesstoken": "'$(cat /run/secrets/LW_AGENT_ACCESS_TOKEN)'"},"serverurl": "https://api.fra.lacework.net"}' > /var/lib/lacework/config/config.json

# ANZ tenants

RUN --mount=type=secret,id=LW_AGENT_ACCESS_TOKEN \
    mkdir -p /var/lib/lacework/config && \
    echo '{"tokens": {"accesstoken": "'$(cat /run/secrets/LW_AGENT_ACCESS_TOKEN)'"},"serverurl": "https://auprodn1.agent.lacework.net"}' > /var/lib/lacework/config/config.json
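The echo commands above assemble config.json by string concatenation, which is easy to break with a misplaced quote. As a hedged alternative, the sketch below produces the same content with a JSON library. The keys tokens.accesstoken and serverurl are taken from the examples on this page; the function name build_agent_config is hypothetical.

```python
import json


def build_agent_config(access_token, server_url=None):
    """Return config.json text with the token and, optionally, a regional server URL."""
    config = {"tokens": {"accesstoken": access_token}}
    if server_url:
        # Only tenants outside the US need to set serverurl.
        config["serverurl"] = server_url
    return json.dumps(config)


# Example for an EU tenant; the token value is a placeholder.
print(build_agent_config("YOUR_ACCESS_TOKEN_HERE", "https://api.fra.lacework.net"))
```

The output can be written to /var/lib/lacework/config/config.json in place of the echo command.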

note

It is possible to install the Lacework agent by fetching and installing the binaries from our official GitHub repository. Optionally, you may choose to upload the lacework/datacollector:latest-sidecar image into your own ECR.

Step 2: Build and Push Image

Now that the image has been defined, build it locally and push it to a container registry such as ECR.

Consider the following example script:

#!/bin/sh

# Set variables for ECR

export YOUR_AWS_ECR_REGION="us-east-2"

export YOUR_AWS_ECR_URI="000000000000.dkr.ecr.${YOUR_AWS_ECR_REGION}.amazonaws.com"

export YOUR_AWS_ECR_NAME="YOUR_ECR_NAME_HERE"

# Store the Lacework Agent access token in a file (See Requirements to obtain one)

echo "YOUR_ACCESS_TOKEN_HERE" > token.key

# Build and tag the image

DOCKER_BUILDKIT=1 docker build \
    --secret id=LW_AGENT_ACCESS_TOKEN,src=token.key \
    --force-rm=true \
    --tag "${YOUR_AWS_ECR_URI}/${YOUR_AWS_ECR_NAME}:latest" .

# Log in to ECR and push the image

aws ecr get-login-password --region ${YOUR_AWS_ECR_REGION} | docker login --username AWS --password-stdin ${YOUR_AWS_ECR_URI}

docker push "${YOUR_AWS_ECR_URI}/${YOUR_AWS_ECR_NAME}:latest"

Step 3: Deploy and Run

Predeployment for AWS ECR

Before attempting to deploy tasks and services using Fargate, ensure you have the following information:

- Subnet ID.

- Security group with correct permissions.

- AWS CloudWatch log group.

- A configured ECS task execution role in IAM:

  - An IAM policy. Attach the existing AmazonECSTaskExecutionRolePolicy to your ECS task execution role.

  - An IAM trust relationship.
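The trust relationship is what lets ECS tasks assume the execution role. This page does not show the policy itself, so the sketch below is an assumption: it uses the ecs-tasks.amazonaws.com service principal that AWS documents for task execution roles, and should be checked against your account's requirements.

```python
import json

# Assumed trust policy: allows the ECS tasks service to assume the role.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ecs-tasks.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Print the document so it can be pasted into the role's trust relationship.
print(json.dumps(TRUST_POLICY, indent=2))
```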

Task Definition

To run the image, AWS requires the configuration of an ECS task definition. See the AWS documentation on task definitions for more information. Consider the example here, and for more examples, see the AWS documentation.

{

"executionRoleArn": "arn:aws:iam::YOUR_AWS_ID:role/ecsTaskExecutionRole",

"containerDefinitions": [

{

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "/ecs/cli-run-task-definition",

"awslogs-region": "YOUR_AWS_ECR_REGION",

"awslogs-stream-prefix": "ecs"

}

},

"cpu": 0,

"environment": [

{

"name": "LaceworkAccessToken",

"value": "YOUR_ACCESS_TOKEN"

},

{

"name": "LaceworkServerUrl",

"value": "OPTIONAL_SERVER_URL_OVERRIDE"

}

],

"image": "YOUR_AWS_ECR_URI/YOUR_AWS_ECR_NAME:latest",

"essential": true,

"name": "cli-fargate"

}

],

"memory": "1GB",

"family": "cli-run-task-definition",

"requiresCompatibilities": [

"FARGATE"

],

"networkMode": "awsvpc",

"cpu": "512"

}

Save this file as taskDefinition.json. Then create the cluster and register the definition with the following commands:

# Create a cluster. You only need to do this once.

aws ecs create-cluster --cluster-name YOUR_CLUSTER_NAME_HERE-cluster

# Register the task definition

aws ecs register-task-definition --cli-input-json file://taskDefinition.json

Diagnostics and Debugging

List Task Definitions

aws ecs list-task-definitions

Create a Service

important

Ensure you specify the correct subnet ID (subnet-abcd1234) and security group ID (sg-abcd1234).

aws ecs create-service --cluster cli-rsfargate-cluster \
    --service-name cli-rsfargate-service \
    --task-definition cli-run-task-definition:1 \
    --desired-count 1 --launch-type "FARGATE" \
    --network-configuration "awsvpcConfiguration={subnets=[subnet-abcd1234],securityGroups=[sg-abcd1234]}"

note

Depending on your VPC configuration, you may need to assign a public IP by adding assignPublicIp=ENABLED to the service's network configuration so that the task can pull the example Docker image.

aws ecs create-service --cluster cli-rsfargate-cluster \
    --service-name cli-rsfargate-service \
    --task-definition cli-run-task-definition:1 \
    --desired-count 1 --launch-type "FARGATE" \
    --network-configuration "awsvpcConfiguration={subnets=[subnet-abcd1234],securityGroups=[sg-abcd1234],assignPublicIp=ENABLED}"
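The two commands differ only in the value passed to --network-configuration. As a small sketch, the helper below builds that shorthand string from lists of subnet and security group IDs, which avoids hand-editing brackets when you have several of each. The function name awsvpc_shorthand is hypothetical; the output format mirrors the commands above.

```python
def awsvpc_shorthand(subnets, security_groups, assign_public_ip=False):
    """Build the shorthand value for the --network-configuration option."""
    parts = [
        "subnets=[" + ",".join(subnets) + "]",
        "securityGroups=[" + ",".join(security_groups) + "]",
    ]
    if assign_public_ip:
        # Needed only when the task must reach the registry over a public IP.
        parts.append("assignPublicIp=ENABLED")
    return "awsvpcConfiguration={" + ",".join(parts) + "}"


print(awsvpc_shorthand(["subnet-abcd1234"], ["sg-abcd1234"], assign_public_ip=True))
```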

List Services

aws ecs list-services --cluster cli-rsfargate-cluster

Describe Running Services

aws ecs describe-services --cluster cli-rsfargate-cluster --services cli-rsfargate-service

Agent Upgrades

When using a multi-stage build, the latest agent release is used anytime you rebuild the application container. The agent will also check for and install the latest release while it runs. See Agent Administration for more information.

Sidecar-Based Deployment

You can deploy Lacework for AWS Fargate using a sidecar approach, which allows you to monitor AWS Fargate containers without changing underlying container images. This deployment method leverages AWS Fargate’s Volumes from capability to map a Lacework container image directory into your application image. With this approach, the original application images stay intact and only the task definition to start and configure the Lacework agent is modified.

This method consists of two main steps: adding a sidecar container and updating the configuration for your application container.

note

This example injects the access token as an environment variable, but the access token could instead be embedded into the image. The secret can also be passed as an environment variable using AWS Secrets Manager.

Requirements

- Understanding of the application image and whether ENTRYPOINT or CMD is used.

- The agent startup script and the agent must be run as root.

This guide assumes that you have already created a task definition with one application container to run in ECS Fargate. A task definition describes the containerized workloads that you want to run. For details, see creating a task definition.

Step 1: Add the Sidecar to your Task Definition

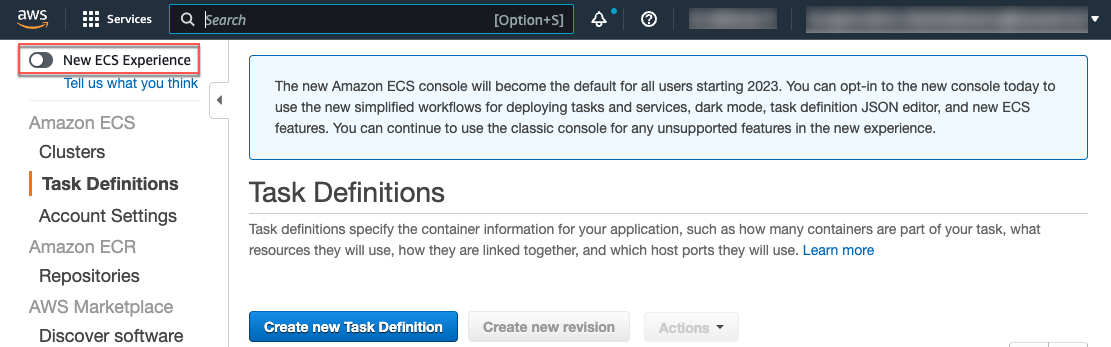

Click Task definitions in the left pane.

Toggle the New ECS Experience slider to switch to the classic AWS console view.

Select the task definition for your application container and click Create new revision.

The Create new revision of Task Definition page appears.

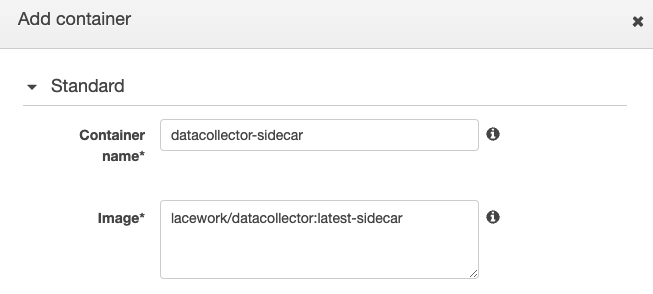

In the Container definitions section, click Add container to add the sidecar container.

The Add container page appears.

In the Container name field, enter datacollector-sidecar.

Set the Image to lacework/datacollector:latest-sidecar.

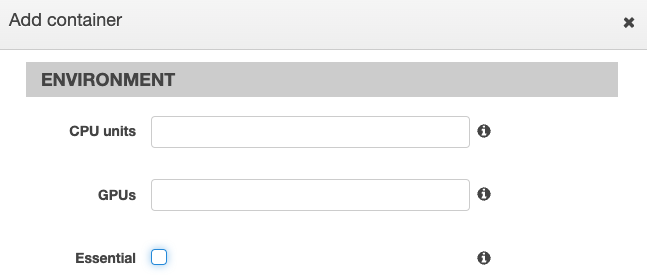

In the Environment section of the Add container page, deselect the Essential checkbox.

Click Add to finish adding the sidecar container and go back to the Create new revision of Task Definition page.

Step 2: Update the Application Container

Before continuing, you must identify whether the container's Dockerfile uses ENTRYPOINT and/or CMD instructions. This is because these values are overridden to run the Lacework agent startup script and then the existing application entry point or command. You can examine the Dockerfile used to build the container to identify ENTRYPOINT and/or CMD instructions. Alternatively, you can use tools to inspect the container image to identify usage. For example, using the Docker Hub web interface, you can inspect the container image layers to identify the usage.

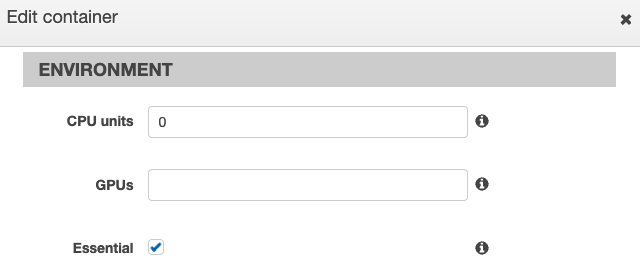

In the Container definitions section of the Create new revision of Task Definition page, click the existing application container name.

The Edit Container page appears.

In the Environment section, do the following:

Ensure that the Essential checkbox is selected.

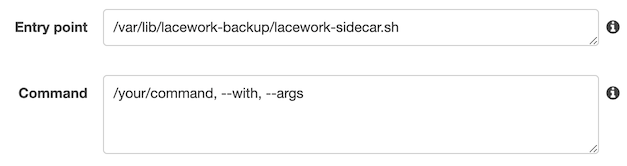

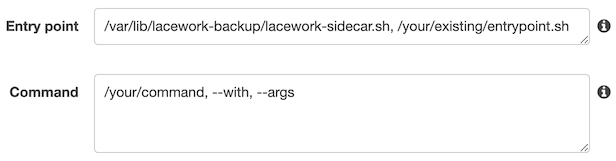

Enter the following in the Entry point field: /var/lib/lacework-backup/lacework-sidecar.sh

If the application container’s Dockerfile has ENTRYPOINT instructions, copy the instructions and paste them after /var/lib/lacework-backup/lacework-sidecar.sh in the Entry point field as shown in Example (with Both Entrypoint and Command) below.

If the application container’s Dockerfile has CMD instructions, copy the instructions and paste them in the Command field.

Example (with Command Only):

Example (with Both Entrypoint and Command):

note

At runtime, Docker concatenates ENTRYPOINT + CMD together and the sidecar script is designed as a typical entrypoint script (it will execute commands passed to it as arguments).

(Optional) Add the LaceworkAccessToken and LaceworkServerUrl environment variables.

For more information about the:

- LaceworkAccessToken variable, see Create Agent Access Tokens.

- LaceworkServerUrl variable, see Agent Server URL.
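The entry point rules above can be sketched as a small function: the sidecar script always comes first, any original ENTRYPOINT arguments follow it, and the original CMD is carried over unchanged. The function name wrap_with_sidecar and the nginx values are illustrative assumptions, not taken from a specific image.

```python
SIDECAR_SCRIPT = "/var/lib/lacework-backup/lacework-sidecar.sh"


def wrap_with_sidecar(entrypoint=None, command=None):
    """Return the entryPoint and command lists for the application container."""
    result = {"entryPoint": [SIDECAR_SCRIPT] + list(entrypoint or [])}
    if command:
        result["command"] = list(command)
    return result


# Command only: the Dockerfile has CMD ["/your-app/run.sh"] and no ENTRYPOINT.
print(wrap_with_sidecar(command=["/your-app/run.sh"]))

# Both: a hypothetical image with ENTRYPOINT ["/docker-entrypoint.sh"]
# and CMD ["nginx", "-g", "daemon off;"].
print(wrap_with_sidecar(["/docker-entrypoint.sh"], ["nginx", "-g", "daemon off;"]))
```

Because the sidecar script executes the arguments passed to it, the application starts the same way it did before the change.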

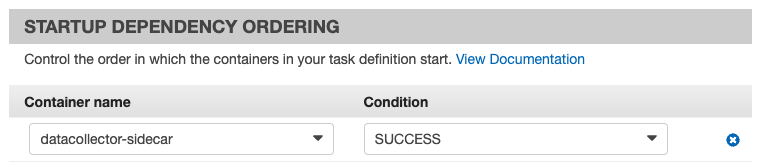

In the Startup Dependency Ordering section, set Container name datacollector-sidecar to Condition SUCCESS. This makes the application container depend on the Lacework sidecar container, so the application container starts only after the sidecar container has run to completion and exited successfully.

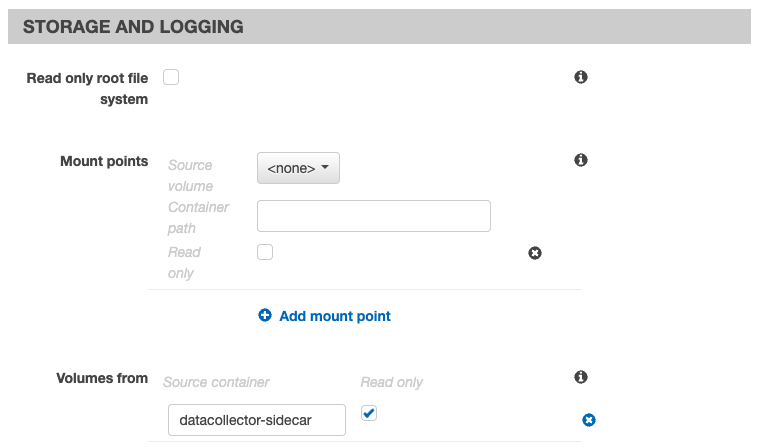

In the Storage and Logging section, do the following to ensure that the application container imports the volume that is exported by the Lacework sidecar container. This makes the volume that contains the Lacework agent executable available in the application container.

- For Volumes from, set the Source container to datacollector-sidecar.

- Select the Read only checkbox.

Click Update to update the application container and go back to the Create new revision of Task Definition page.

Click Create to create the new revision of the task definition.

The task definition's container definitions now list both the sidecar and application containers.

Diagnostics and Debugging

Predeployment

Configure the ECS task execution role to include AmazonECSTaskExecutionRolePolicy.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"ecr:GetAuthorizationToken",

"ecr:BatchCheckLayerAvailability",

"ecr:GetDownloadUrlForLayer",

"ecr:BatchGetImage",

"logs:CreateLogStream",

"logs:PutLogEvents"

],

"Resource": "*"

}

]

}

Before you push an image to ECR, ensure that your AWS credentials include the required ECS access policies to push images.

Agent Upgrades

The sidecar container always points to the latest image on hub.docker.com, for example, lacework/datacollector:latest-sidecar. This is updated to the latest stable release of the Lacework agent. Additionally, when the agent is run, it periodically checks if a new version is available.

Example Task Definition JSON

This is an example task definition, with some parts removed to illustrate the relevant configurations.

{

"taskDefinition": {

"taskDefinitionArn": "arn:aws:ecs:YOUR_AWS_REGION:YOUR_AWS_ID:task-definition/datacollector-sidecar-demo:1",

"containerDefinitions": [

{

"name": "datacollector-sidecar",

"image": "lacework/datacollector:latest-sidecar",

"cpu": 0,

"portMappings": [],

"essential": false,

"environment": [],

"mountPoints": [],

"volumesFrom": [],

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "/ecs/datacollector-sidecar-demo",

"awslogs-region": "YOUR_AWS_REGION",

"awslogs-stream-prefix": "ecs"

}

}

},

{

"name": "main-application",

"image": "yourorganization/application-name:latest-main",

"cpu": 0,

"portMappings": [

{

"containerPort": 80,

"hostPort": 80,

"protocol": "tcp"

}

],

"essential": true,

"entryPoint": [

"/var/lib/lacework-backup/lacework-sidecar.sh"

],

"command": [

"/your-app/run.sh"

],

"environment": [

{

"name": "LaceworkAccessToken",

"value": "YOUR_ACCESS_TOKEN"

}

],

"mountPoints": [],

"volumesFrom": [

{

"sourceContainer": "datacollector-sidecar",

"readOnly": true

}

],

"dependsOn": [

{

"containerName": "datacollector-sidecar",

"condition": "SUCCESS"

}

],

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "/ecs/datacollector-sidecar-demo",

"awslogs-region": "YOUR_AWS_REGION",

"awslogs-stream-prefix": "ecs"

}

}

}

],

"family": "datacollector-sidecar-demo",

"taskRoleArn": "arn:aws:iam::YOUR_AWS_ID:role/ecsInstanceRole",

"executionRoleArn": "arn:aws:iam::YOUR_AWS_ID:role/ecsInstanceRole",

"networkMode": "awsvpc",

"revision": 1,

"volumes": [],

"status": "ACTIVE",

"requiresAttributes": [

// Removed for brevity

],

"placementConstraints": [],

"compatibilities": [

"EC2",

"FARGATE"

],

"requiresCompatibilities": [

"FARGATE"

],

"cpu": "512",

"memory": "1024",

"registeredAt": "2021-08-25T06:20:08.202000-05:00",

"registeredBy": "arn:aws:sts::YOUR_AWS_ID:assumed-role/your-assumed-role-name/username"

}

}
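The console steps and the JSON above can also be applied programmatically. The sketch below patches a task definition dictionary so that it matches the example: it adds the sidecar container, prepends the sidecar script to the application entry point, and adds the volumesFrom and dependsOn entries. The function name add_sidecar is hypothetical, and the sketch assumes any ENTRYPOINT baked into the image has already been copied into the task definition, since it is not visible there otherwise.

```python
import copy

SIDECAR_NAME = "datacollector-sidecar"
SIDECAR_IMAGE = "lacework/datacollector:latest-sidecar"
SIDECAR_SCRIPT = "/var/lib/lacework-backup/lacework-sidecar.sh"


def add_sidecar(task_def, app_name):
    """Return a copy of task_def with the Lacework sidecar wired into app_name."""
    patched = copy.deepcopy(task_def)
    containers = patched["containerDefinitions"]
    app = next(c for c in containers if c["name"] == app_name)
    app["essential"] = True
    # The sidecar script runs first and then executes the original entry point.
    app["entryPoint"] = [SIDECAR_SCRIPT] + app.get("entryPoint", [])
    app.setdefault("volumesFrom", []).append(
        {"sourceContainer": SIDECAR_NAME, "readOnly": True}
    )
    app.setdefault("dependsOn", []).append(
        {"containerName": SIDECAR_NAME, "condition": "SUCCESS"}
    )
    containers.insert(
        0, {"name": SIDECAR_NAME, "image": SIDECAR_IMAGE, "essential": False}
    )
    return patched


original = {
    "containerDefinitions": [
        {"name": "main-application", "command": ["/your-app/run.sh"]}
    ]
}
patched = add_sidecar(original, "main-application")
```

Working on a copy keeps the original definition available for comparison before you register the new revision.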

Troubleshooting Sidecar Script

You can add the LaceworkVerbose=true environment variable to your task definition which will tail the datacollector debug log.

In most cases, errors occur because either the agent does not have outbound access to the internet, or there are SSL issues indicating your container does not have the ca-certificates bundle installed.

Using AWS Secrets to Pass an Access Token to ECS Task

For scenarios where you do not want to include the access token in your Dockerfile, you can pass the access token for your Lacework agent to the ECS task as an AWS secret.

Create an AWS Secret Containing your Access Token

- Open the Secrets Manager console.

- Click Store a new secret.

- Select Other type of secrets.

- Click the Plaintext tab and enter the access token as the value of the secret.

- Click Next.

- Enter a name for this secret (for example, LaceworkAccessToken) and click Next.

- Accept the default Disable automatic rotation selected for Configure automatic rotation, and click Next.

- Review these settings, and then click Store.

- Select the secret you created and save the Secret ARN to reference in your task execution IAM policy and task definition in later steps.

Update Your Task Execution Role

Open the IAM console.

Click Roles.

Search the list of roles and select ecsTaskExecutionRole.

Click Permissions > Add inline policy.

Enter the following JSON text in the JSON tab.

Add a new policy to your role that allows you to read the new secret by specifying the full ARN of the secret you created in the previous step.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"secretsmanager:GetSecretValue"

],

"Resource": [

"arn:aws:secretsmanager:region:aws_account_id:secret:(arn_of_the_secret)"

]

}

]

}

Where arn_of_the_secret is a fully qualified secret + key value.

Update the ECS Task

Add the ARN to the ECS task definition:

"secrets": [

{

"valueFrom": "arn:aws:secretsmanager:region:aws_account_id:secret:(arn_of_the_secret)",

"name": "LaceworkAccessToken"

}

],

Where arn_of_the_secret is a fully qualified secret + key value.

The ECS task sets the access token as an environmental variable. The lacework-sidecar.sh script reads the env variable and writes it into the config.json in that instance of the task for the datacollector to read.

Remove Access Token from Dockerfile and Task Definition File

If you use the AWS secret to pass the access token, you must remove the access token definitions from your Dockerfile and Task Definition files.

Remove the access token definition and key value "tokens": {"accesstoken": "'$(cat /run/secrets/LW_AGENT_ACCESS_TOKEN)'"} from your Dockerfile, as shown in the following entry:

RUN --mount=type=secret,id=LW_AGENT_ACCESS_TOKEN \
    mkdir -p /var/lib/lacework/config && \
    echo '{}' > /var/lib/lacework/config/config.json

Remove the access token from your task definition file by deleting the following entry:

"environment": [

{

"name": "LaceworkAccessToken",

"value": "YOUR_ACCESS_TOKEN"

}

],
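The task definition change amounts to moving LaceworkAccessToken from the environment list to the secrets list. The sketch below applies both edits to one container definition. The function name use_secret_for_token and the sample ARN are hypothetical; the ARN follows the general Secrets Manager format, and you should substitute the Secret ARN you saved earlier.

```python
def use_secret_for_token(container, secret_arn):
    """Replace a plain LaceworkAccessToken value with a Secrets Manager reference."""
    env = [e for e in container.get("environment", [])
           if e["name"] != "LaceworkAccessToken"]
    if env:
        container["environment"] = env
    else:
        # Nothing else was defined, so drop the now-empty list.
        container.pop("environment", None)
    secrets = [s for s in container.get("secrets", [])
               if s["name"] != "LaceworkAccessToken"]
    secrets.append({"name": "LaceworkAccessToken", "valueFrom": secret_arn})
    container["secrets"] = secrets
    return container


container = {
    "name": "main-application",
    "environment": [{"name": "LaceworkAccessToken", "value": "YOUR_ACCESS_TOKEN"}],
}
# Hypothetical ARN used only for illustration.
arn = "arn:aws:secretsmanager:us-east-2:000000000000:secret:LaceworkAccessToken-AbCdEf"
use_secret_for_token(container, arn)
```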

Fargate Information in the Lacework Console

After you install the agent, it takes 10 to 15 minutes for agent data to appear in the Lacework Console under Resources > Agents.

Fargate Task Information

To view Fargate task information, go to Resources > Agents and the Agent Monitor table. Note that the displayed hostname is not an actual host but rather an attribute (taskarn_containerID). Expand the hostname to display its tags, which contain Fargate task information and additional metadata collected by the agent.

This tag provides the Fargate engine version: VmInstanceType:AWS_ECSVxFARGATE

Where x can be 3 or 4 (1.3 or 1.4 engine respectively).
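If you export agent tags for reporting, the mapping from this tag to a platform version can be sketched as follows. The tag format AWS_ECSVxFARGATE is taken from this page; the function name fargate_platform_version is hypothetical, and unrecognized values return None rather than a guess.

```python
import re


def fargate_platform_version(vm_instance_type):
    """Map a VmInstanceType value such as AWS_ECSV4FARGATE to '1.4'."""
    match = re.fullmatch(r"AWS_ECSV([34])FARGATE", vm_instance_type)
    if match is None:
        return None
    return "1." + match.group(1)


print(fargate_platform_version("AWS_ECSV4FARGATE"))
```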

The following tags are prefixed with net.lacework.aws.fargate.

| Tag | Description |
|---|---|
| cluster | The cluster where the task was started. |
| family | The task definition family. |
| pullstartedat | The time the container pull started. |
| pullstoppedat | The time the container pull stopped. The difference from pullstartedat represents how long the pull took. |
| revision | The task revision. |
| taskarn | The full task ARN. |

You can also view the above tag information at Resources > Host > Applications in the List of Active Containers table. Make the Machine Tags column visible; it is hidden by default.

Fargate Container Information

You can find container information at Resources > Host > Applications in the List of Active Containers table. The container ID is available, but AWS currently does not expose underlying infrastructure.

To view tags, make the Machine Tags column visible; it is hidden by default.

The containers listed in Container Image Information are application containers. If you used the sidecar deployment method, the sidecar container itself is not displayed in this table because it is not running and has no runtime or cost associated with it. The Lacework agent, however, runs as a process inside your application container rather than in a container of its own, so it is visible in the Applications Information table (search for datacollector).

Additional Notes

This section provides additional clarification about Lacework behavior with Fargate deployments.

File Integrity Monitoring (FIM)

By default, Lacework does not perform file integrity checks within containers. For a Fargate-only environment, there is no information under the Resources > Host > Files (FIM) menu. In an environment containing Fargate and non-Fargate deployments, the expected information displays. The checks are not performed by default because they are expensive for CPU and memory.

FIM will default to off in AWS Fargate, but you can enable it through runtime configuration.

Enable FIM through config.json

You can configure FIM through the agent configuration file (config.json). FIM runs in Fargate immediately following a cooling period. The default cooling period is 60 minutes. However, if you want to run it immediately, you can set the cooling period to zero in the config.json file as follows:

{"fim":{"mode":"enable", "coolingperiod":"0"}}

Enable FIM as an Environment Variable

You can configure FIM as an environment variable in the ECS task definition:

{

"containerDefinitions": [

{

"environment": [

{

"name" : "LaceworkConfig",

"value" : "{\"fim\":{\"mode\":\"enable\", \"coolingperiod\":\"0\"}}"

}

]

}

]

}
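The LaceworkConfig value is itself a JSON document stored as a string, which is why the quotes are escaped in the task definition. As a sketch, the helper below lets a JSON library handle the escaping. The function name fim_environment_entry is hypothetical, and treating the cooling period as a number of minutes is an assumption based on the 60-minute default mentioned above.

```python
import json


def fim_environment_entry(cooling_period=0):
    """Build the LaceworkConfig environment entry that enables FIM."""
    fim_config = {"fim": {"mode": "enable", "coolingperiod": str(cooling_period)}}
    # json.dumps produces the inner JSON string; serializing the task
    # definition later adds the backslash escapes automatically.
    return {"name": "LaceworkConfig", "value": json.dumps(fim_config)}


entry = fim_environment_entry()
print(json.dumps({"environment": [entry]}, indent=2))
```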

Run Application as Non-Root and Agent as Root

You can run the Fargate application as an underprivileged application user while invoking the agent as root in cases where you cannot run the application as root.

Ensure passwordless sudo is enabled in your container by including a statement such as the following in your Dockerfile:

RUN apt-get update && apt-get install -y sudo && \
    usermod -aG sudo user && \
    echo '%sudo ALL=(ALL) NOPASSWD:ALL' >> /etc/sudoers

note

The user should not have any restrictions to run commands with sudo privileges. For example, having the following restriction for the user named user in /etc/sudoers.d/users will prevent the agent from starting properly.

user ALL=(ALL) NOPASSWD: /var/lib/lacework-backup/lacework-sidecar.sh

In the containerDefinitions section of your task definition, add the SYS_PTRACE parameter as shown below to enable the agent to detect the processes and connections of applications in the container that are running under a user other than the root user.

"linuxParameters": {

"capabilities": {

"add": ["SYS_PTRACE"]

}

}

In your task definition, invoke lacework-sidecar.sh with sudo, as in this example:

sh,-c,sudo -E /var/lib/lacework-backup/lacework-sidecar.sh && /docker-entrypoint.sh

The sudo -E command in the example enables the agent to read the environment variables defined in AWS.
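The two task definition changes for a non-root application can be sketched together: add the SYS_PTRACE capability and replace the entry point with a shell command that starts the agent through sudo. The function name run_agent_as_root is hypothetical, and /docker-entrypoint.sh stands in for your application's own start command.

```python
SIDECAR_SCRIPT = "/var/lib/lacework-backup/lacework-sidecar.sh"


def run_agent_as_root(container, app_entrypoint="/docker-entrypoint.sh"):
    """Patch a container definition so the agent starts via sudo."""
    params = container.setdefault("linuxParameters", {})
    added = params.setdefault("capabilities", {}).setdefault("add", [])
    if "SYS_PTRACE" not in added:
        added.append("SYS_PTRACE")
    # sudo -E preserves the task's environment variables for the agent.
    container["entryPoint"] = [
        "sh",
        "-c",
        "sudo -E " + SIDECAR_SCRIPT + " && " + app_entrypoint,
    ]
    return container


container = run_agent_as_root({"name": "main-application"})
```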

Use Sidecar with a Distroless Container

Starting with Agent v6.0, you can use the sidecar-based deployment method along with a distroless application container. The term distroless means that the application container does not contain a libc runtime or other common Linux utilities.

Modify the ENTRYPOINT specified for the application container in the Sidecar-Based Deployment steps described above to:

/var/lib/lacework-backup/distroless/bin/sh,/var/lib/lacework-backup/lacework-sidecar.sh

If you are editing the Task Definition JSON directly, this will be:

"entryPoint": [

"/var/lib/lacework-backup/distroless/bin/sh",

"/var/lib/lacework-backup/lacework-sidecar.sh"

]

In the application container definition, set the environment variable LaceworkDistroless to true.

Ensure that the application container has a /etc/passwd and /etc/group mapping for UIDs and GIDs. If these files are not present, the distroless sidecar will use the defaults from Alpine Linux.

note

At runtime, a /lib/lib-musl.so.1 shared library and a /etc/ssl/cert.pem certificate bundle will be soft linked into the application container.

Event Triage

Lacework has the following methods for accessing Lacework events:

- Alert channels such as Email, Jira, Slack

- Lacework Console: Events

Alert Channels

Click View Details → to load the Event Details page in your default browser.

Lacework Console: Events

Click Events and set your desired time frame.

A summary of the currently selected event is available in the Event Summary in the upper right quadrant of the page.

This page presents the following major launch points:

- Event Summary: review high-level event information. In the above figure, the description contains a destination host, a destination port, an application, and a source host. Each of these links provides a resource-specific view. For example, if you click the destination host, you can view all activity for that destination host seen by the Lacework Platform.

- Timeline > Selected Event > Details: opens the Event Details page. This is the same page that opens when you click the View Details link from an alert channel.

Triage Example

The following exercise focuses on the Event Details and covers the key data points to triage a Fargate event.

The 'WHAT'

Expand the details for WHAT.

Header Row

| Key | Value Description | Notes |
|---|---|---|
| APPLICATION | What is running | None |
| MACHINE | The Fargate ARN:task for the job | Machine-centric view |
| CONTAINER | The container the task is running | None |

Application Row

| Key | Value Description | Notes |
|---|---|---|
| APPLICATION | What is running | None |
| EARLIEST KNOWN TIME | First time an application was seen | Useful for lining up incident responses with timelines in non-Lacework services. |

Container

| Key | Value Description | Notes |
|---|---|---|
| FIRST SEEN TIME | First time a container was seen | Useful for lining up incident responses with timelines in non-Lacework services. Useful for spot checks of your expected container lifecycle versus what is running. |

The 'WHERE'

Expand the details for WHERE. There is a WHERE section if the event is network related.

| Key | Value Description | Notes |
|---|---|---|
| HOSTNAME | Pivot to inspect the occurrence of the host activity within your environment | None |
| PORT LIST | Ports activity was seen over | Context. Do you expect this service to connect over these ports? Are there any ports that are interesting, such as non-standard ports for servers? |
| IN/OUT BYTES | Data transmitted/received | Inferring risk based on payload size. |

Investigation

This section provides high-level situational context that relates to the application deployment and your knowledge of its expected behavior.

Was the application involved in the event from Packaged software?

In this case, the event is for nginx and tomcat. If you install tomcat / nginx using the package manager, this is marked as yes. If you install tomcat / nginx using the package manager and this is marked no, investigate if there was a change to how you build your container. If there is no change to your build process, investigate how a non-packaged version was installed.

Has the Hash(SHA256) of the application been involved in an event change?

Was the application involved altered / replaced? If so, check your build and upgrade process. It is typical that containers will not see application updates. A change here warrants continued investigations to determine the cause of change.

Has the application involved in the event been running as Root?

Indicator of the scope of privilege the application has. If running as root is not part of your build processes, check for an alteration to your build processes and privilege escalation.

Has the application transferred more than the median amount of data compared to the last day?

Consider the amount of data transferred versus what is expected by the application.
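To make the question concrete, the sketch below compares one observation against the median of a set of earlier samples. It illustrates the arithmetic only and is not Lacework's implementation; the function name, the sample values, and the choice of hourly byte counts are all assumptions.

```python
from statistics import median


def exceeds_daily_median(current_bytes, last_day_bytes):
    """Return True when current_bytes is above the median of the earlier samples."""
    return current_bytes > median(last_day_bytes)


# Hypothetical byte counts observed over the previous day.
last_day = [1200, 900, 1500, 1100, 1300, 1000]
print(exceeds_daily_median(5000, last_day))
```

A value far above the median is a prompt to check whether the transfer matches what the application is expected to do, not proof of a problem on its own.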

Related Events Timeline

The timeline is useful for building context for events around the Event Details you are currently investigating.

Summary

Using the above data, you should be able to create the following triage process.

Determine the scope of the event:

- Containers

- Applications

- Hosts

- arn:task

Drill down into the event relationships to get context across your infrastructure secured by the Lacework platform.

Determine what is expected as a part of your build processes and what is unexpected.

From this path, continue to narrow down if there was a change to build processes or a net new build process or infrastructure event/service. For these scenarios, focus on updating the application, build, and network configurations.

For scenarios where there is a risk of compromise, focus on the timeline that led to the potential compromise, investigate the entities involved, and create a structured story that enables rapid incident response (IR) and process updates.